Ultimate Guide: How ChatGPT, Perplexity and Claude Use Your Data

AI use is at an all-time high, and data privacy and confidentiality are critically important when using AI models. Before you add text or documents to an AI model, read below how and when these platforms may use your content. We also list what content to avoid adding to these models and explain why AI policies can be incredibly helpful.

Recently, I had a discussion with a lawyer who had shared full client documents, including detailed confidential information, with an AI tool. He was completely unaware that the data we feed into AI systems can be used by the provider, for example to train its models. That conversation made me realize something important: not everyone knows how large language models (LLMs) like ChatGPT, Claude and Perplexity actually handle the content we provide.

This is why I wrote this article, which shows when ChatGPT, Perplexity and Claude use (or do not use) your data.

What we will cover:

- Why businesses, law firms, and other organizations need comprehensive AI policies

- How free and paid versions of ChatGPT differ

- How Claude and Perplexity compare in terms of data handling

- Why you should never share private or proprietary content

This article is part of an ongoing research project comparing AI models. Please do not treat it as legal advice. Always verify the current policies to ensure compliance with your (organization’s) requirements, and carefully review the relevant documentation to understand if and how a provider might use your input to refine its algorithms.

2. What Are AI Models Learning From? Our Data!

It’s common knowledge that AI models, including ChatGPT, Claude and Perplexity, are initially trained on large datasets (e.g., internet text, books, articles). However, many people don’t realize that the additional content we submit can also be used to train these models. This includes, for example, content we submit when asking them to:

- Analyze or summarize data (e.g., uploading sections of a contract)

- Write or edit an article (e.g., pasting confidential notes)

- Generate ideas or code (e.g., providing business-critical snippets)

Whenever you input text into these systems, it may be used – depending on the model and the plan you’re on – to further refine or train the AI. In this article, we’ll dig deeper into how various well-known models handle (or don’t handle) your content, so you can make more informed decisions when you’re working with sensitive data.

3. ChatGPT: Paid vs. Non-Paid Tiers

ChatGPT is currently the most widely used generative AI chatbot. When it comes to data privacy and confidentiality, and to the question of whether OpenAI uses your data to train its models, it is important to understand the difference between OpenAI’s free and paid plans.

ChatGPT (Free)

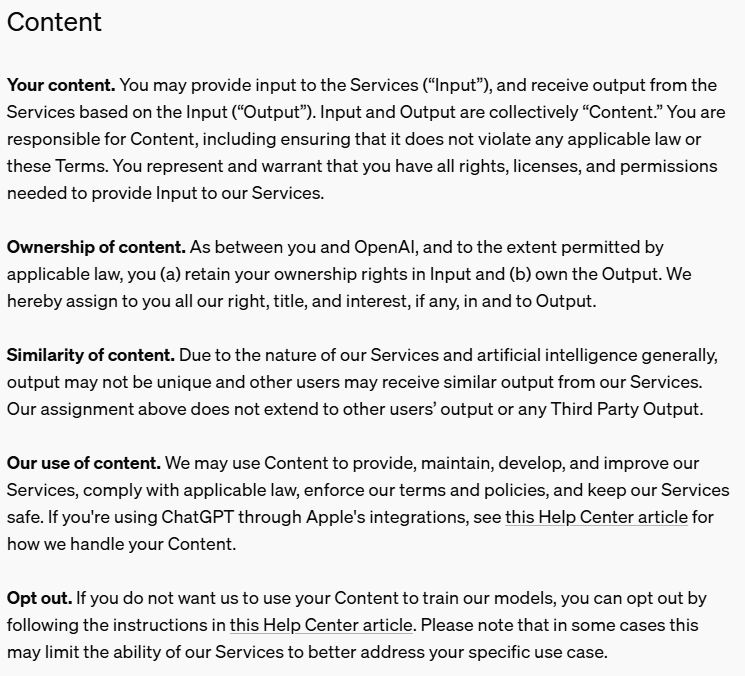

- Data Usage: Under the free plan, OpenAI may use the content you provide to further train or refine its models. This means that if you share potentially sensitive information, it could (in theory) end up in the AI’s training data. See OpenAI’s Terms of Use (Free) on this point; the image above shows the relevant passage on whether OpenAI may use your input.

- Key Takeaway: Be mindful of what you enter into the model. If it’s something you wouldn’t want to become part of the broader AI model or if it’s private, proprietary or personal, do not include it in your prompts. Also remember that it can be against the law to add certain content.

ChatGPT (Paid: Enterprise, API, and Other Business Solutions)

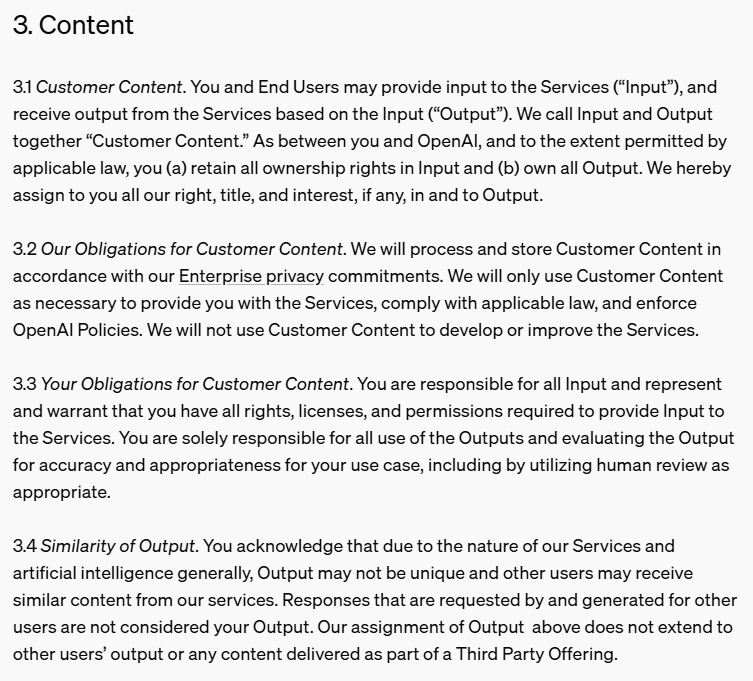

- Greater Privacy: According to OpenAI’s Enterprise Terms of Use, customer content is not used to develop or improve the service. See OpenAI’s Business Terms (Paid) on this point; the image above shows the relevant passage on whether OpenAI may use your data.

- Stronger Security: Enterprise clients typically get enhanced security measures, better data handling policies, and the option to opt out of data usage altogether.

- Who Should Consider This: Those handling confidential documents, proprietary business processes or sensitive client data. Lawyers, for instance, might prefer the enterprise offering if they frequently need to process large volumes of privileged information. However, it is not certain that this input and content is secure. Until further notice, we would therefore still advise everyone, especially lawyers, doctors, government employees and others who have access to sensitive information, not to add such information to AI models.

- Opt-out: If you do not want your data to contribute to AI model improvements, use the following link to opt out: https://lnkd.in/dVPcMfH8

4. Comparing Other AI Models: Claude and Perplexity

Besides ChatGPT, many other AI models are widely used. We will cover Gemini in a future article; here we go into the details of Claude and Perplexity.

Claude (by Anthropic)

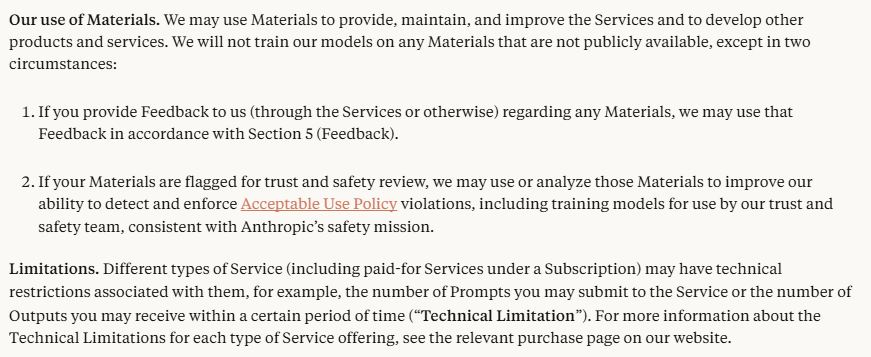

- Focus on Safety: Claude is well known for its emphasis on AI safety and ethical guidelines. However, its terms regarding data usage may still allow Anthropic to analyze or store user inputs to improve its systems unless otherwise specified. See Claude’s Consumer Terms of Service on this point; the image above shows the relevant passage on whether Anthropic may use your data.

- Paid Services: Anthropic also offers enterprise solutions, which come with a separate set of Commercial Terms containing stricter confidentiality and privacy protections. The Commercial Terms of Service include the following text: “Anthropic may not train models on Customer Content from paid Services”. Always check the most recent Terms of Service for precise details.

Perplexity

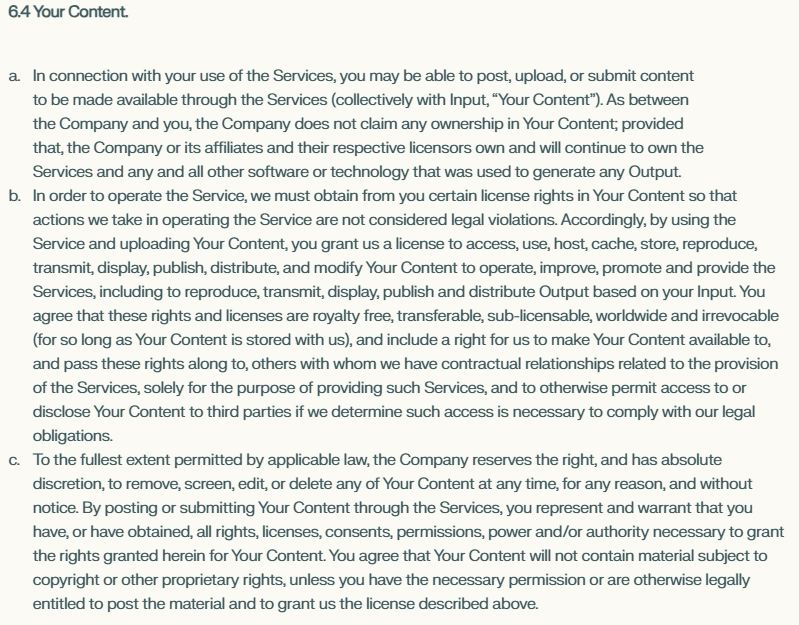

- Research-Oriented: Perplexity is built around providing concise, sourced answers. It has been described to me as the “Google” way of searching with AI, and it has been said that Perplexity may not store or use data in exactly the same way as ChatGPT. However, its Terms of Service seem to indicate that Perplexity will use your content, among other things, for training purposes. See the relevant part of Art. 6.4 (b): “Accordingly, by using the Service and uploading Your Content, you grant us a license to access, use, host, cache, store, reproduce, transmit, display, publish, distribute, and modify Your Content to operate, improve, promote and provide the Services, including to reproduce, transmit, display, publish and distribute Output based on your Input.” The image above shows the relevant passage on whether Perplexity may use your data.

- Enterprise Terms of Service: See these specific terms here. Enterprise customers do provide a license to Perplexity regarding their content. However, the following important wording is added: “Notwithstanding the foregoing, Perplexity does not and will not use Customer Content to train, retrain or improve Perplexity’s foundation models that generate Output.”

5. What Not to Upload to AI Tools

Whether you’re using ChatGPT, Claude, Perplexity or any other AI platform, apply common sense and caution. Avoid sharing, for example:

- Confidential details (client, business or family member names, private letters, contracts & strategies).

- Personally identifiable information (PII) (addresses, phone numbers, medical records).

- Proprietary or business-critical data (unreleased products, prices, financials).

- Sensitive materials (health info, internal memos, or other sensitive business and personal material).

- Data protected by applicable law (copyrighted material, illegal content, government data).

If you must work with potentially sensitive text, consider (a) anonymizing the data first, (b) using an enterprise-level AI solution whose Terms of Use prohibit using your content for model training, or (c) using an ‘on-premise’ AI model that anonymizes data.
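As an illustration of option (a), here is a minimal sketch of a first redaction pass, assuming simple pattern matching is acceptable as a starting point. It replaces email addresses, phone-number-like strings and a list of names you supply with placeholders before the text is pasted into any AI tool. The function name `redact` and the patterns are hypothetical examples, not a complete anonymization method: addresses, case details and indirect identifiers still need manual review or a dedicated PII-detection tool.

```python
import re
from typing import Sequence

# Hypothetical, deliberately simple patterns; real PII detection needs far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Phone-like: optional "+", then at least 8 characters of digits, spaces, dots,
# dashes or parentheses, starting and ending with a digit (may also catch dates).
PHONE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def redact(text: str, names: Sequence[str] = ()) -> str:
    """Replace emails, phone-like numbers and the given names with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, so their digits are not seen as phones
    text = PHONE.sub("[PHONE]", text)
    for name in names:
        # re.escape treats the name literally; matching ignores upper/lower case
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    sample = "Contact Jan de Vries at jan.devries@example.com or +31 6 1234 5678."
    print(redact(sample, names=["Jan de Vries"]))
    # prints: Contact [NAME] at [EMAIL] or [PHONE].
```

A pass like this only reduces risk; it does not make sensitive documents safe to share, so the guidance above still applies.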

6. Why Organizations Need AI Policies

Many organizations still lack formal guidelines for using AI tools, leaving employees to guess what’s permissible. It is our understanding, and also our concern, that most employees do not realize which data they should or should not add to AI models. This is why we advocate the wide adoption of AI policies in companies.

Here’s why AI policies matter:

- Education & Awareness: Ensures everyone in your organization understands the risks and best practices when interacting with AI.

- Risk Management: A solid policy helps prevent data leaks and breaches of confidentiality.

- Compliance: Aligns your company or legal practice with industry regulations and local laws, which is a must for highly regulated sectors.

- Consistency: Establishes uniform standards so that everyone, from interns to senior partners, uses AI responsibly and avoids costly mistakes.

Stay tuned: We’ll be writing a longer article soon detailing the key components of an effective AI policy, with real-world examples to guide your organization’s strategy.

7. Real-World AI Policy Examples

There has been a lot of discussion on whether using AI models for work should be forbidden; in some countries it is even forbidden for everyone. I believe this is the wrong way of dealing with a technology we will not be able to stop. We should embrace AI technology but be very mindful of how we use it. Below are two examples, from a bar association and a court, that favor embracing AI with the right guardrails rather than banning it.

Florida Bar’s AI Policy

The Florida Bar issued Ethics Opinion 24-1 on January 19, 2024, providing guidance on the use of generative AI by Florida attorneys (source link: 16).

Key points include (source: 1):

- Lawyers may use generative AI in their practice, but must:
  - Protect client confidentiality
  - Provide accurate and competent services
  - Avoid improper billing practices
  - Comply with lawyer advertising restrictions

- Attorneys must research AI programs’ policies on data retention, data sharing, and self-learning to maintain client confidentiality.

- Lawyers are responsible for their work product and must verify that AI use aligns with ethical obligations.

- Informed client consent is recommended when using third-party AI programs that involve disclosure of confidential information.

Delaware Supreme Court’s AI Policy

The Delaware Supreme Court adopted an interim policy on October 21, 2024, governing the use of generative AI by judicial officers and court personnel (see full text here: 7). Key aspects (source: 11) include:

- The policy allows the use of approved generative AI tools by judicial branch officers, employees, law clerks, interns, externs, and volunteers.

- Users of AI remain responsible for the output and must ensure accuracy.

- Training on AI capabilities and limitations is required before use.

- AI should not influence judicial decisions or replace human judgment.

- AI use must comply with existing laws and judicial branch policies.

- Only approved AI tools are permitted on state technology resources.

Both policies aim to balance the benefits of AI in legal practice with ethical considerations and safeguards to protect client interests and maintain the integrity of the legal system. More and more courses are being offered for lawyers & judges explaining the risks and how to avoid them.

The examples above contain information gathered with Perplexity, which cites the sources it used. We have not yet verified the AI policies (or the latest information relating to them) in detail.

8. Embrace AI, Responsibly

From streamlining legal tasks and writing articles to supporting creative brainstorming, generative AI offers enormous advantages. However, that conversation with the lawyer who unknowingly uploaded full client files to a free-tier AI tool reminds us that we need to improve our understanding of how AI models handle our data.

- Read the Terms: Familiarize yourself with the data usage policies of each AI platform you use.

- Choose Wisely: If data sensitivity is high, consider enterprise solutions or, as we currently advise most legal professionals, do not share sensitive data at all.

- Use Caution: Always think twice before uploading potentially sensitive content.

- Establish AI Policies: Protect your organization, employees, and clients by setting clear, enforceable guidelines.

Final Thoughts

AI is transforming the way we work, but it is also transforming how data can move beyond our control. Actively keep track of how your content is used, whether you’re on the free or paid version of ChatGPT, Claude or Perplexity.

The lesson? We need to stay informed, be cautious and be proactive. That way, you can use the power of AI without compromising your most sensitive information. Keep an eye out for our upcoming in-depth article on building AI policies that can guide you and your team toward ethical and secure usage of these exciting new technologies.

Disclaimer: This article is a research project, provides general information about AI data usage and does not constitute legal advice.

I wrote this article as a follow-up to my LinkedIn post on this subject. If you have any further questions about the above, contact me via lowa@amstlegal.com or set up a meeting directly here.
